Brief description

We propose a new approach to vision-based gesture recognition that supports robust and efficient human-robot interaction, with the aim of developing socially assistive robots. The gestural vocabulary consists of five user-specified hand gestures that convey messages of fundamental importance in human-robot dialogue. Despite their small number, recognizing these gestures poses considerable challenges. Aiming at natural, easy-to-memorize means of interaction, users have identified gestures that consist of both static and dynamic hand configurations, involve different scales of observation (from arms to fingers), and exhibit intrinsic ambiguities. Moreover, the gestures need to be recognized regardless of the multifaceted variability of the human subjects performing them. Recognition needs to be performed online, in continuous video streams that also contain irrelevant or unmodeled motions. All of the above must be achieved by analyzing information acquired by a possibly moving RGBD camera, in cluttered environments with considerable lighting variations. We present a gesture recognition method that addresses these challenges, along with promising experimental results obtained from relevant user trials.

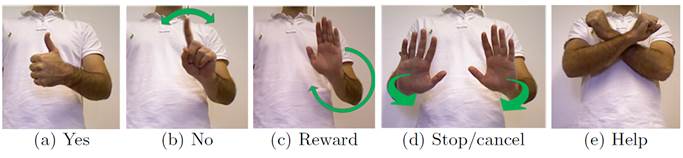

Illustration of the supported gestures. The correspondence between gestures and physical actions of the hands/arms is as follows: (a) “Yes”: A thumbs-up hand posture. (b) “No”: A sideways waving hand with extended index finger. (c) “Reward”: A circular motion of an open palm in a plane parallel to the image plane. (d) “Stop/cancel”: A two-handed push-forward gesture. (e) “Help”: Two arms in a cross configuration.

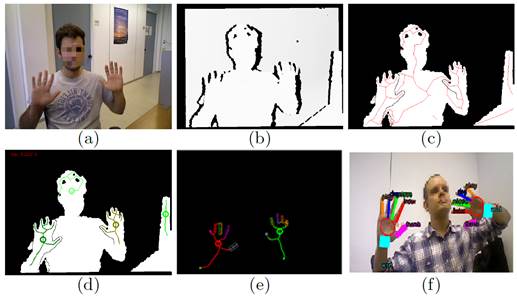

Illustration of intermediate results for hand detection. (a) Input RGB frame. (b) Input depth frame I_t. (c) The binary mask M_t, where far-away structures have been suppressed and depth discontinuities of I_t appear as background pixels. Skeleton points S_t are shown superimposed (red pixels). (d) A forest of minimum spanning trees is computed based on (c), identifying initial hand hypotheses. Circles represent the palm centers. (e) Checking hypotheses against a simple hand model facilitates the detection of the actual hands, filtering out wrong hypotheses. (f) Another example showing the detection results (wrist, palm, fingers) in a scene with two hands.
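Step (c) of the caption above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `binary_mask`, the thresholds `max_depth` and `max_jump`, the millimetre units, and the 4-neighbour discontinuity test are all assumptions made for illustration. The sketch only shows the idea of suppressing far-away structures and turning depth discontinuities into background pixels.

```python
from typing import List

Depth = List[List[float]]  # assumed: depth in millimetres, 0 marks a missing reading


def binary_mask(depth: Depth,
                max_depth: float = 1500.0,   # hypothetical "far-away" cutoff
                max_jump: float = 50.0) -> List[List[int]]:  # hypothetical discontinuity threshold
    """Return a mask with 1 for near, locally smooth pixels and 0 for background."""
    rows, cols = len(depth), len(depth[0])
    mask = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            d = depth[r][c]
            # Suppress missing readings and far-away structures.
            if d <= 0 or d > max_depth:
                continue
            # Treat a large depth jump to any 4-neighbour as a discontinuity.
            # A missing (0) neighbour also counts as a jump in this sketch.
            discontinuity = False
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    if abs(depth[rr][cc] - d) > max_jump:
                        discontinuity = True
                        break
            if not discontinuity:
                mask[r][c] = 1
    return mask


# A near surface (800 mm) on the left, a far wall (3000 mm) on the right.
frame = [[800, 800, 3000, 3000] for _ in range(3)]
mask = binary_mask(frame)
```

In this toy frame only the leftmost column survives: the second column lies on the depth edge and becomes background, and the far wall is suppressed by the depth cutoff. A real pipeline would then extract skeleton points from such a mask before building the spanning trees described in (d).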

Sample results

A video with gesture recognition experiments.

Contributors

- Damien Michel, Konstantinos Papoutsakis, Antonis Argyros.

Relevant publications

- D. Michel, K. Papoutsakis, A.A. Argyros, “Gesture recognition for the perceptual support of assistive robots”, International Symposium on Visual Computing (ISVC 2014), Las Vegas, Nevada, USA, Dec. 8-10, 2014.

The electronic versions of the above publications can be downloaded from my publications page.