Brief description

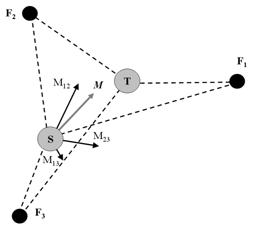

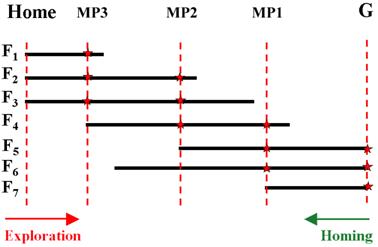

Taking into account the fact that vision and perception are not the goal but the means, the focus is shifted from studying what an artificial system can perceive through vision to studying what an artificial system really needs to perceive in order to fulfill its goals. This shift of focus is important because perceptual processes are then studied not in isolation but in the context of the goals, the environment and the behaviors of an artificial system. In this framework we propose a novel, vision-based method for robot homing, the problem of computing a route so that a robot can return to its initial “home” position after the execution of an arbitrary “prior” path. The method assumes that the robot tracks visual features in the panoramic views of the environment that it acquires as it moves. By exploiting only angular information regarding the tracked features, a local control strategy moves the robot between two positions, provided that at least three features can be matched in the panoramas acquired at these positions. The strategy succeeds when certain geometric constraints on the configuration of the two positions relative to the features are satisfied. To achieve long-range homing, the features’ trajectories are organized in a visual memory during the execution of the “prior” path. When homing is initiated, the robot selects Milestone Positions (MPs) on the “prior” path by exploiting information in its visual memory. The MP selection process aims at picking positions that guarantee the success of the local control strategy between two consecutive MPs. The sequence of successive MPs guides the robot successfully even if the visual context at the “home” position is radically different from the visual context at the position where homing was initiated. Experimental results from a prototype implementation of the method demonstrate that homing can be achieved with high accuracy, independent of the distance traveled by the robot.
The contribution of this work is that it shows how a complex navigational task such as homing can be accomplished efficiently, robustly and in real time by exploiting primitive visual cues. Such cues carry implicit information regarding the 3D structure of the environment. Thus, neither explicit range information nor a geometric map is required.
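
Because the method uses only angular information, the basic measurement is the bearing of each tracked feature. The minimal sketch below assumes an unwrapped cylindrical panorama whose width `width` spans exactly 360 degrees, with azimuth increasing with column index (both are assumptions; a real panoramic camera needs calibration). Under that assumption a feature's column maps linearly to its bearing, and the angles between angularly consecutive features follow from bearing differences. The function names are hypothetical.

```python
import math
from typing import List, Sequence

TWO_PI = 2 * math.pi


def column_to_bearing(u: float, width: float) -> float:
    """Hypothetical mapping: column u of a 360-degree unwrapped panorama -> azimuth in [0, 2*pi)."""
    return (TWO_PI * u / width) % TWO_PI


def subtended_angles(columns: Sequence[float], width: float) -> List[float]:
    """Angles between angularly consecutive features, in counter-clockwise order.

    For three or more features at distinct bearings the angles sum to 2*pi.
    """
    b = sorted(column_to_bearing(u, width) for u in columns)
    n = len(b)
    # The last gap wraps around from the largest bearing back to the smallest.
    return [(b[(k + 1) % n] - b[k]) % TWO_PI for k in range(n)]
```

Rotating the robot in place cyclically shifts the panorama, which adds the same offset to every bearing, so these angles stay the same. For example, features at columns 0, 90 and 180 of a 360-column panorama and the same features shifted by 50 columns both give angles of pi/2, pi/2 and pi.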

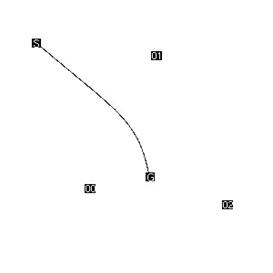

An illustration of the robot homing problem. The robot starts at position A and moves until it reaches some position T. The problem is then to return to its home position A.

The method employs a local control strategy that permits the robot to move between two adjacent positions with the aid of angular information regarding at least three landmarks that need to be identified and corresponded between the two views. More information on this control strategy can be found in the related papers listed at the bottom of the page. It turns out that by employing this control strategy, the robot can move between two adjacent positions, although not necessarily on a straight line. The control strategy can be generalized to more than three landmarks.
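
The published control law is given in the papers below and is not reproduced on this page. To illustrate the general idea only, the sketch below implements a hypothetical bisector-based law of our own. For every pair of angularly consecutive landmarks, it compares the angle the pair subtends at the current position with the angle it subtended at the goal. It then moves along the bisector of that pair's sector: into the sector when the current angle is too small, and out of it when the angle is too large. The law uses bearings only. Landmark coordinates appear solely to simulate what the panoramic camera would measure. The names `bearings`, `control_vector` and `home`, the gain and the step count are all assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
TWO_PI = 2 * math.pi


def bearings(pos: Point, landmarks: Sequence[Point]) -> List[float]:
    """Bearing in [0, 2*pi) from pos to each landmark (stands in for the panoramic camera)."""
    px, py = pos
    return [math.atan2(ly - py, lx - px) % TWO_PI for lx, ly in landmarks]


def control_vector(cur: Sequence[float], goal: Sequence[float]) -> Tuple[float, float]:
    """Hypothetical bisector-based law (illustration only, not the published law).

    cur[k] and goal[k] are the bearings of landmark k measured at the current
    position and at the goal; landmark identities must already be matched.
    """
    n = len(cur)
    order = sorted(range(n), key=lambda k: cur[k])  # counter-clockwise order as seen now
    vx = vy = 0.0
    for idx in range(n):
        i, j = order[idx], order[(idx + 1) % n]   # angularly consecutive pair
        theta_cur = (cur[j] - cur[i]) % TWO_PI     # angle the pair subtends now
        theta_goal = (goal[j] - goal[i]) % TWO_PI  # angle it subtended at the goal
        mid = cur[i] + theta_cur / 2               # bisector of the sector between i and j
        err = theta_goal - theta_cur               # > 0: move into the sector, < 0: out of it
        vx += err * math.cos(mid)
        vy += err * math.sin(mid)
    return vx, vy


def home(start: Point, goal: Point, landmarks: Sequence[Point],
         gain: float = 0.1, steps: int = 300) -> Point:
    """Simulate a robot repeatedly stepping along control_vector, starting at start."""
    goal_b = bearings(goal, landmarks)  # the only thing remembered about the goal
    x, y = start
    for _ in range(steps):
        vx, vy = control_vector(bearings((x, y), landmarks), goal_b)
        x, y = x + gain * vx, y + gain * vy
    return x, y
```

Take landmarks at (1, 0), (-1, 0) and (0, 2). A robot started at (0, -3), outside their convex hull, converges to the goal (0, 0.5) inside it. This is a symmetric toy case, and we make no claim that this simplified law inherits the reachability properties of the authors' law.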

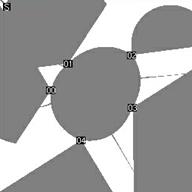

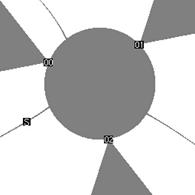

The properties of the proposed control strategy have been studied and its reachability area has been identified. The figures above show in gray the reachability area of the control strategy for three and five landmarks, respectively. This means that, by employing the proposed control strategy, the robot can reach any gray point on the plane regardless of its starting position. Note that the convex hull of the landmarks is always reachable.
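
Since the convex hull of the landmarks is always reachable, a cheap and conservative reachability check for a goal is a point-in-convex-hull test. The sketch below is our own illustration using Andrew's monotone chain algorithm; it does not compute the full gray reachability area, which may be larger than the hull. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of (a - o) x (b - o); positive when o -> a -> b turns left."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: Sequence[Point]) -> List[Point]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]  # drop duplicated endpoints


def in_convex_hull(p: Point, landmarks: Sequence[Point]) -> bool:
    """True if p lies inside or on the boundary of the landmarks' convex hull."""
    hull = convex_hull(landmarks)
    if len(hull) < 3:
        return False
    # Inside a counter-clockwise convex polygon, p is never to the right of an edge.
    return all(cross(hull[k], hull[(k + 1) % len(hull)], p) >= 0 for k in range(len(hull)))
```

With five landmarks at (0, 0), (4, 0), (4, 4), (0, 4) and (2, 6), the goals (2, 2) and (2, 5) pass the test, and (5, 5) does not. A goal that fails this test is not necessarily unreachable, because it may still lie in the gray region outside the hull.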

Our approach to homing combines the proposed local control strategy with a visual memory built during the prior path. This way, the robot solves the homing problem by applying the proposed local control law between automatically defined milestone positions. The required feature matching is achieved through KLT corner tracking (in the examples below) or with an in-house color tracker. Further results can be found on the more detailed page on angle-based robot navigation.
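
This page does not spell out the milestone selection rule. The sketch below is a hypothetical, simplified version that enforces only the "at least three matched features" requirement from the method description. It ignores the geometric constraints that the real selection must also respect. It assumes the visual memory stores, for each tracked feature, the first and last frame of the prior path in which the feature was tracked, treating each KLT track as one contiguous interval. Starting from the frame where homing begins, it greedily jumps back to the earliest frame that still shares enough features with the current milestone. All names are illustrative.

```python
from typing import Dict, List, Tuple

# Hypothetical visual memory: feature id -> (first frame, last frame) of its track.
VisualMemory = Dict[str, Tuple[int, int]]


def shared_features(memory: VisualMemory, a: int, b: int) -> List[str]:
    """Features tracked continuously over every frame between a and b (inclusive)."""
    lo, hi = min(a, b), max(a, b)
    return [fid for fid, (first, last) in memory.items() if first <= lo and last >= hi]


def select_milestones(memory: VisualMemory, last_frame: int, min_shared: int = 3) -> List[int]:
    """Greedy milestone selection from last_frame back to frame 0 (the home position)."""
    milestones = [last_frame]
    current = last_frame
    while current > 0:
        nxt = None
        for f in range(current):  # earliest frame first: longest possible hop
            if len(shared_features(memory, f, current)) >= min_shared:
                nxt = f
                break
        if nxt is None:
            raise ValueError(f"no earlier frame shares {min_shared} features with frame {current}")
        milestones.append(nxt)
        current = nxt
    return milestones
```

Consider a toy memory with tracks a: 0-4, b: 0-5, c: 0-6, g: 2-8, d: 3-10, e: 4-10 and f: 5-10, with homing starting at frame 10. The milestones come out as frames 10, 5, 2 and 0. The robot would then drive between consecutive milestones using the local control strategy.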

Sample results

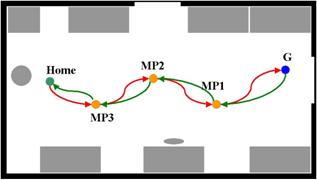

The figure above shows the layout of the space where a homing experiment was conducted.

The video above shows the homing experiment. The circular mark on the floor indicates how accurately the proposed method reaches the home position.

Contributors

Antonis Argyros, Kostas Bekris, Stelios Orphanoudakis, Lydia Kavraki.

Relevant publications

- A.A. Argyros, C. Bekris, S.C. Orphanoudakis, L.E. Kavraki, “Robot Homing by Exploiting Panoramic Vision”, Journal of Autonomous Robots, vol. 19, no. 1, pp. 7-25, July 2005.

- K.E. Bekris, A.A. Argyros, L.E. Kavraki, “Angle-Based Methods for Mobile Robot Navigation”, Lecture Notes in Computer Science, “Imaging beyond the Pinhole Camera”, K. Daniilidis, R. Klette (editors), in press.

- K.E. Bekris, A.A. Argyros, L.E. Kavraki, “Angle-Based Methods for Mobile Robot Navigation: Reaching the Entire Plane”, in Proceedings of the International Conference on Robotics and Automation (ICRA'04), pp. 2373-2378, New Orleans, USA, April 26 - May 1, 2004.

- A.A. Argyros, C. Bekris, S. Orphanoudakis, “Robot Homing based on Corner Tracking in a Sequence of Panoramic Images”, in Proceedings of the Computer Vision and Pattern Recognition Conference (CVPR'01), pp. 3-10, Hawaii, USA, December 11-14, 2001.

The electronic versions of the above publications can be downloaded from my publications page.