Brief description

We propose a new hybrid method for 3D human body pose estimation based on RGBD data. We treat this as an optimization problem that is solved using a stochastic optimization technique. The solution to the optimization problem consists of the pose parameters of a human body model that best register it to the available observations. Our method can make use of any skinned, articulated human body model. However, we focus on personalized models that can be acquired easily and automatically using existing human scanning and mesh rigging techniques. The observations consist of the 3D structure of the human, as measured by the RGBD camera, and the locations of the body joints, computed by a discriminative, CNN-based component. A series of quantitative and qualitative experiments demonstrates the accuracy and the benefits of the proposed approach. In particular, we show that the proposed approach achieves state-of-the-art results compared to competing methods and that the use of personalized body models significantly improves the accuracy of 3D human pose estimation.
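The optimization view can be illustrated with a toy problem. The sketch below is not the authors' implementation. It makes several simplifications:

- A hypothetical planar two-segment arm stands in for the skinned human model.
- Points sampled along the arm stand in for the depth observations.
- Particle swarm optimization (PSO) serves as one example of a stochastic optimizer. The description above does not name the specific technique.

The segment lengths, the weight `w_joint` and the PSO coefficients are all illustrative assumptions.

```python
import numpy as np

L1, L2 = 0.30, 0.25  # hypothetical segment lengths (meters)

def forward_kinematics(theta):
    """Shoulder, elbow and wrist positions of a planar 2-link arm (toy body model)."""
    a1, a2 = theta
    shoulder = np.zeros(2)
    elbow = shoulder + L1 * np.array([np.cos(a1), np.sin(a1)])
    wrist = elbow + L2 * np.array([np.cos(a1 + a2), np.sin(a1 + a2)])
    return np.stack([shoulder, elbow, wrist])

def surface_points(theta, n=10):
    """Sample points along both segments (stand-in for the model surface)."""
    j = forward_kinematics(theta)
    t = np.linspace(0.0, 1.0, n)[:, None]
    return np.vstack([j[0] + t * (j[1] - j[0]), j[1] + t * (j[2] - j[1])])

def objective(theta, obs_points, obs_joints, w_joint=1.0):
    """Depth-like term plus joint term; w_joint is an assumed weighting."""
    model = surface_points(theta)
    # Each observed point is matched to its nearest model point.
    d = np.linalg.norm(obs_points[:, None, :] - model[None, :, :], axis=2)
    depth_term = np.mean(d.min(axis=1) ** 2)
    # Discrepancy between model joints and detector-provided joints.
    joint_term = np.mean(np.sum((forward_kinematics(theta) - obs_joints) ** 2, axis=1))
    return depth_term + w_joint * joint_term

def pso(f, lo, hi, n_particles=40, iters=100, seed=0):
    """Plain global-best PSO, with positions clipped to the search box."""
    rng = np.random.default_rng(seed)
    dim = len(lo)
    x = rng.uniform(lo, hi, size=(n_particles, dim))
    v = np.zeros_like(x)
    pbest = x.copy()
    pbest_f = np.array([f(p) for p in x])
    g = pbest[np.argmin(pbest_f)].copy()
    w, c1, c2 = 0.72, 1.49, 1.49  # commonly used inertia/acceleration values
    for _ in range(iters):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (g - x)
        x = np.clip(x + v, lo, hi)
        fx = np.array([f(p) for p in x])
        better = fx < pbest_f
        pbest[better] = x[better]
        pbest_f[better] = fx[better]
        g = pbest[np.argmin(pbest_f)].copy()
    return g, pbest_f.min()

# Synthetic, noise-free observations generated from a known pose.
true_theta = np.array([0.6, -0.9])
obs_points = surface_points(true_theta)
obs_joints = forward_kinematics(true_theta)
lo, hi = np.array([-np.pi, -np.pi]), np.array([np.pi, np.pi])
best, cost = pso(lambda th: objective(th, obs_points, obs_joints), lo, hi)
print(best, cost)
```

The two terms mirror the two kinds of observations described above: the 3D structure and the estimated joints. The joint term gives a broad basin of attraction, and the point term refines the fit to the measured geometry.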

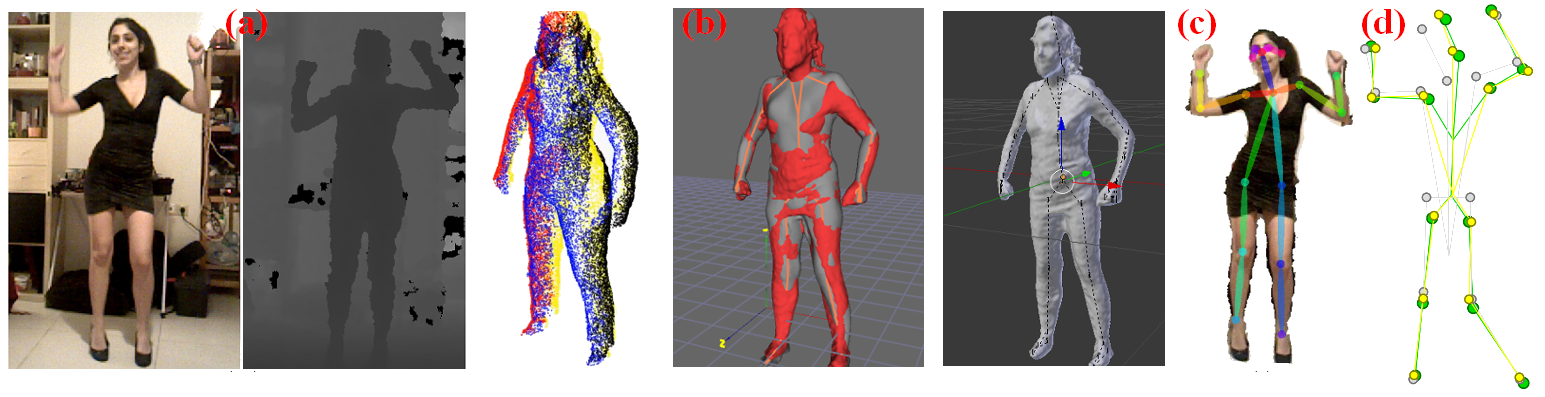

The proposed approach for 3D human pose estimation operates as follows. (a) The input to the method is a sequence of RGBD frames. (b) We leverage personalized, parametric, skinned human body models that can be acquired easily. (c) We also employ OpenPose to estimate the 2D locations of the human body joints. We define a hybrid approach for fitting the model (b) to the observations (a), guided by the hints provided by the 2D joint estimates (c). The result of this process is visualized in (d). The green skeleton is the ground truth, the gray one is the neural network estimate and the yellow one is the result of the proposed approach. Although the neural network estimate is far from the ground truth, the proposed method accurately estimates the 3D human pose thanks to the high-quality model and the use of depth information.
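Combining 2D joint estimates with depth requires relating pixels to 3D camera coordinates. A common way to do this is pinhole back-projection, X = (u - cx) Z / fx and Y = (v - cy) Z / fy. It is shown here only as an illustration and is not necessarily how the method uses the joints.

The sketch makes the following assumptions:

- The intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values.
- The depth map is in meters, with 0 marking missing measurements.
- The median over a small window, controlled by the hypothetical parameter `win`, is one simple way to cope with noisy or missing depth.

```python
import numpy as np

def backproject_joints(joints_2d, depth, fx, fy, cx, cy, win=2):
    """Lift 2D joints (u, v) in pixels to 3D camera coordinates (meters).

    Joints that fall outside the image, or have no valid depth nearby, become NaN.
    """
    h, w = depth.shape
    points = np.full((len(joints_2d), 3), np.nan)
    for i, (u, v) in enumerate(joints_2d):
        ui, vi = int(round(u)), int(round(v))
        if not (0 <= ui < w and 0 <= vi < h):
            continue  # joint outside the image
        patch = depth[max(vi - win, 0):vi + win + 1, max(ui - win, 0):ui + win + 1]
        valid = patch[patch > 0]  # assume 0 marks missing depth
        if valid.size == 0:
            continue
        z = np.median(valid)
        points[i] = [(u - cx) * z / fx, (v - cy) * z / fy, z]
    return points

# Hypothetical flat scene 2 m away, with a hole in the depth map.
depth = np.full((480, 640), 2.0)
depth[100:110, 100:110] = 0.0
joints = [(320.0, 240.0), (420.0, 240.0), (105.0, 105.0)]
pts = backproject_joints(joints, depth, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(pts)
```

The third joint lands in the hole, so it is left undefined rather than given a spurious depth. A fitting procedure can then simply ignore it.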

Sample results

Dataset

The quantitative evaluation of our method requires a dataset containing RGBD frames of moving humans, together with ground truth for their 3D motion and their personalized 3D skinned models. To this end, we constructed a synthetic dataset as follows. We scanned four subjects (two male and two female) who differ significantly in body size (height, weight, Body Mass Index). We then collected motion capture data from the CMU dataset, covering a variety of motions such as bending, jumping jacks and simultaneous twisting of the torso and limbs. Finally, we rendered the models in the laboratory environment of the MHAD dataset. Skin deformations were computed with dual quaternion blending to avoid the "candy-wrapping" artifacts produced by standard linear blending. We thus obtained RGBD frames of known human models performing known, complex motions in a realistic environment. The final result is four RGBD sequences of 720 frames each, in which the two male and two female subjects perform the same motions. The dataset is available for download as a single file (~0.9 GB).
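The "candy-wrapping" artifact can be reproduced with two bones. The first is at rest and the second is twisted by 180° about the x axis. A vertex is skinned with equal weights to both.

Linear blending averages the two transformed positions, so the vertex collapses onto the twist axis. Dual quaternion blending averages the rigid transforms themselves, so the vertex keeps its distance from the axis.

This is a minimal textbook sketch in NumPy, not the renderer used to build the dataset. The helper names are hypothetical.

```python
import numpy as np

def qmul(a, b):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return np.array([w1*w2 - x1*x2 - y1*y2 - z1*z2,
                     w1*x2 + x1*w2 + y1*z2 - z1*y2,
                     w1*y2 - x1*z2 + y1*w2 + z1*x2,
                     w1*z2 + x1*y2 - y1*x2 + z1*w2])

def qconj(q):
    return q * np.array([1.0, -1.0, -1.0, -1.0])

def axis_angle_quat(axis, angle):
    axis = np.asarray(axis, dtype=float) / np.linalg.norm(axis)
    return np.concatenate([[np.cos(angle / 2)], np.sin(angle / 2) * axis])

def rotate(q, p):
    """Rotate 3D point p by unit quaternion q."""
    return qmul(qmul(q, np.concatenate([[0.0], p])), qconj(q))[1:]

def linear_blend(p, quats, trans, weights):
    """Linear blend skinning: weighted average of transformed positions."""
    return sum(w * (rotate(q, p) + t) for q, t, w in zip(quats, trans, weights))

def dual_quaternion_blend(p, quats, trans, weights):
    """Dual quaternion skinning: blend rigid transforms, then apply once."""
    real, dual = np.zeros(4), np.zeros(4)
    for q, t, w in zip(quats, trans, weights):
        qd = 0.5 * qmul(np.concatenate([[0.0], t]), q)  # dual part encodes translation
        if np.dot(q, quats[0]) < 0:  # keep rotations in the same hemisphere
            q, qd = -q, -qd
        real += w * q
        dual += w * qd
    n = np.linalg.norm(real)
    real, dual = real / n, dual / n
    t = 2.0 * qmul(dual, qconj(real))[1:]  # recover the blended translation
    return rotate(real, p) + t

p = np.array([0.0, 1.0, 0.0])  # vertex at unit distance from the twist (x) axis
quats = [axis_angle_quat([1, 0, 0], 0.0), axis_angle_quat([1, 0, 0], np.pi)]
trans = [np.zeros(3), np.zeros(3)]
weights = [0.5, 0.5]

r_lbs = np.hypot(*linear_blend(p, quats, trans, weights)[1:])
r_dqs = np.hypot(*dual_quaternion_blend(p, quats, trans, weights)[1:])
print(r_lbs, r_dqs)
```

With linear blending the radius drops to about zero, which pinches the skin into the "candy-wrapper" shape. With dual quaternion blending the vertex is instead rotated halfway (90°) and stays at radius 1.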

Contributors

- Ammar Qammaz, Damien Michel, Antonis A. Argyros.

- This work has been supported by the EU projects Co4Robots and ACANTO.

Relevant publications

- A. Qammaz, D. Michel and A.A. Argyros, "A Hybrid Method for 3D Pose Estimation of Personalized Human Body Models", In IEEE Winter Conference on Applications of Computer Vision (WACV 2018), Lake Tahoe, NV, USA, March 2018.

The electronic version of the above publication can be downloaded from my publications page.