Brief description

We propose a method that relies on markerless visual observations to track the full articulation of two hands that interact with each other in a complex, unconstrained manner. We formulate this as an optimization problem whose 54-dimensional parameter space represents all possible configurations of two hands, each represented as a kinematic structure with 26 Degrees of Freedom (DoFs), encoded by 27 parameters per hand because the global orientation is represented as a quaternion. To solve this problem, we employ Particle Swarm Optimization (PSO), a stochastic, evolutionary optimization method, to find the two-hand configuration that best explains the RGB-D observations provided by a Kinect sensor. To the best of our knowledge, the proposed method is the first to attempt and achieve articulated motion tracking of two strongly interacting hands. Extensive quantitative and qualitative experiments with simulated and real-world image sequences demonstrate that an accurate and efficient solution to this problem is indeed feasible.
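The search over the 54-D space can be pictured with a generic global-best PSO. The sketch below is a textbook variant with the Clerc-Kennedy constriction factor, written in plain Python; the swarm size, generation count, coefficients and the toy `sphere` objective are assumptions for illustration only. In the actual method, evaluating a particle means rendering the corresponding two-hand hypothesis and comparing it to the Kinect data, which is far more expensive than this stand-in.

```python
import math
import random


def pso_minimize(objective, lower, upper, n_particles=64, n_generations=25,
                 c1=2.05, c2=2.05, seed=0):
    """Global-best PSO with Clerc-Kennedy constriction (generic sketch).

    Returns (best_position, best_value) found inside the box [lower, upper].
    """
    rng = random.Random(seed)
    dim = len(lower)
    phi = c1 + c2  # the constriction formula requires phi > 4
    chi = 2.0 / abs(2.0 - phi - math.sqrt(phi * phi - 4.0 * phi))

    # Random initial swarm inside the search box, zero initial velocities.
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]

    for _ in range(n_generations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Pull toward the particle's own best and the swarm's best.
                vel[i][d] = chi * (vel[i][d]
                                   + c1 * r1 * (best_pos[i][d] - pos[i][d])
                                   + c2 * r2 * (g_pos[d] - pos[i][d]))
                # Move, then clamp to the box (e.g. joint-angle limits).
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lower[d]), upper[d])
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


if __name__ == "__main__":
    # Toy stand-in for the real objective: distance to a hidden 54-D "pose".
    target = [0.5] * 54

    def sphere(x):
        return sum((xi - ti) ** 2 for xi, ti in zip(x, target))

    pose, err = pso_minimize(sphere, [-1.0] * 54, [1.0] * 54)
    print(f"best error: {err:.4f}")
```

Each particle is one candidate 54-D configuration, so the cost grows roughly with (number of particles) × (number of generations) objective evaluations; this is why those two PSO parameters trade accuracy against speed.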

From a methodological point of view, the proposed approach combines the merits of two recently proposed methods for tracking hand articulations. In earlier work (BMVC 2011), we proposed a method for tracking the full articulation of a single, isolated hand based on the RGB-D data provided by a Kinect sensor; here we extend that approach so that it can track two strongly interacting hands. In another recent work (ICCV 2011), we tracked a hand interacting with a known rigid object. There, the fundamental idea is to model hand-object relations and to treat occlusions as a source of information rather than as a complicating factor. We extend this idea by demonstrating that it can be exploited effectively in the much more complex problem of tracking two articulated objects (two hands). Moreover, this more complex problem is solved using input from a single compact Kinect sensor, as opposed to the calibrated multi-camera system employed in the ICCV 2011 work.
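Treating occlusions as information can be made concrete with a toy z-buffer. When the two-hand model is rendered as a whole, a finger hidden behind the other hand does not appear in the synthetic depth map, so a hypothesis is only compared against what the sensor could actually see. The sketch below assumes each hand has already been rendered to its own depth layer (`EMPTY` where that hand is absent); `compose_depth` and `visible_mask` are illustrative names, not the authors' GPU renderer.

```python
import math

EMPTY = math.inf  # pixel not covered by this hand


def compose_depth(*layers):
    """Z-buffer composition: keep the nearest surface at every pixel."""
    rows, cols = len(layers[0]), len(layers[0][0])
    return [[min(layer[r][c] for layer in layers) for c in range(cols)]
            for r in range(rows)]


def visible_mask(layer, composed):
    """True where this layer's surface is the one the camera actually sees."""
    return [[v != EMPTY and v == z for v, z in zip(lrow, crow)]
            for lrow, crow in zip(layer, composed)]


if __name__ == "__main__":
    # 1 x 3 toy depth maps (mm): the right hand passes in front of the left.
    left = [[500.0, 500.0, EMPTY]]
    right = [[EMPTY, 450.0, 450.0]]
    scene = compose_depth(left, right)
    print(scene)                      # [[500.0, 450.0, 450.0]]
    print(visible_mask(left, scene))  # [[True, False, False]]
```

In the example, the middle pixel of the left hand is occluded, so any error in the left hand's pose at that pixel cannot be penalized; conversely, a hypothesis that places the right hand elsewhere would leave that pixel showing the left hand at 500 mm and be penalized against the observed 450 mm.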

Experimental results show that the achieved two-hand tracking accuracy is on the order of 6 mm in scenarios involving complex interaction between the two hands.
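Accuracy figures of this kind are usually reported as a mean 3D distance. As an assumption for illustration only, the sketch below scores an estimate by the mean Euclidean distance between corresponding 3D points (for example joint or fingertip positions) of the estimated and ground-truth hand models; `mean_point_error_mm` is a hypothetical helper.

```python
import math


def mean_point_error_mm(estimated, ground_truth):
    """Mean Euclidean distance (mm) between corresponding 3D model points."""
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("need two non-empty point lists of equal length")
    total = sum(math.dist(p, q) for p, q in zip(estimated, ground_truth))
    return total / len(estimated)


if __name__ == "__main__":
    est = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    gt = [(3.0, 4.0, 0.0), (10.0, 0.0, 6.0)]
    print(mean_point_error_mm(est, gt))  # 5.5
```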

Graphical illustration of the proposed method. From Kinect input, a depth map (c) of the image regions corresponding to hands is extracted by masking the depth information (b) with the result of skin color detection performed on the RGB data (a). The proposed method fits the 54-D joint model of two hands (d) onto these observations, thus recovering the hand articulation that best explains them (e).
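A minimal sketch of the two steps in this pipeline, under stated assumptions: depth maps and skin masks are same-sized 2D lists, 0 marks missing depth, and the rendering of a hypothesis is already available as a depth map. The clamped per-pixel difference is one plausible way to score how well a hypothesis explains the observations, since clamping keeps a few wildly wrong pixels from dominating the score; it is an illustrative stand-in, not the exact objective function used by the method, and `clamp_mm=40.0` is an arbitrary choice.

```python
def mask_depth(depth, skin_mask):
    """Keep depth only where skin was detected; 0 marks 'no observation'."""
    return [[d if s else 0 for d, s in zip(drow, srow)]
            for drow, srow in zip(depth, skin_mask)]


def depth_discrepancy(observed, rendered, clamp_mm=40.0):
    """Mean clamped |observed - rendered| over pixels covered by either map.

    Pixels covered by only one map get the full clamp penalty (silhouette
    mismatch); pixels that are empty in both maps are ignored.
    """
    total, count = 0.0, 0
    for orow, rrow in zip(observed, rendered):
        for o, r in zip(orow, rrow):
            if o == 0 and r == 0:
                continue
            if o == 0 or r == 0:
                total += clamp_mm
            else:
                total += min(abs(o - r), clamp_mm)
            count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    depth = [[800.0, 810.0], [900.0, 0.0]]     # raw Kinect-style depth (mm)
    skin = [[True, False], [True, True]]       # skin detection on RGB
    observed = mask_depth(depth, skin)         # [[800.0, 0], [900.0, 0.0]]
    rendered = [[805.0, 0.0], [0.0, 0.0]]      # depth of one hypothesis
    print(depth_discrepancy(observed, rendered))  # (5 + 40) / 2 = 22.5
```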

Sample results

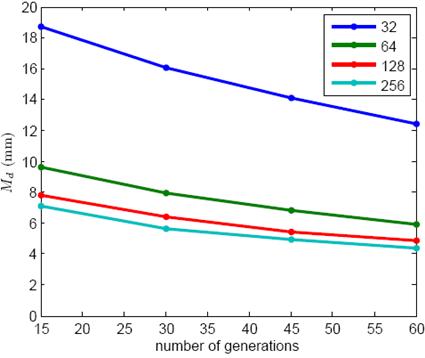

Quantitative evaluation of the performance of the method with respect to the PSO parameters.

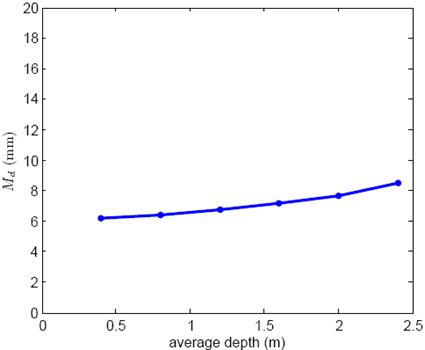

Quantitative evaluation of the performance of the method with respect to the average distance from the sensor.

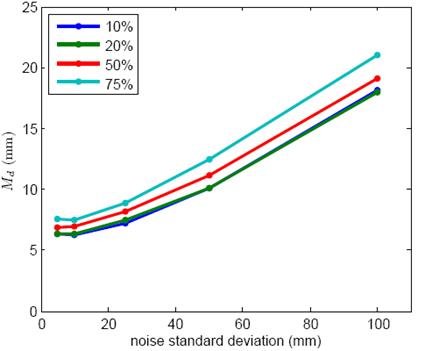

Quantitative evaluation of the performance of the method with respect to depth and skin-color detection noise.
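To probe robustness on simulated sequences, clean synthetic inputs can be corrupted before tracking. The sketch below is a hypothetical noise model (zero-mean Gaussian noise on valid depth pixels and independent random flips of skin labels); the function names and parameters are assumptions and do not reproduce the exact perturbations used in these experiments.

```python
import random


def add_depth_noise(depth, sigma_mm, rng):
    """Add zero-mean Gaussian noise to valid (non-zero) depth pixels."""
    return [[d + rng.gauss(0.0, sigma_mm) if d != 0 else 0 for d in row]
            for row in depth]


def flip_skin_labels(mask, flip_prob, rng):
    """Flip each skin/non-skin label independently with probability flip_prob."""
    return [[(not s) if rng.random() < flip_prob else s for s in row]
            for row in mask]


if __name__ == "__main__":
    rng = random.Random(42)
    depth = [[800.0, 805.0], [0, 790.0]]
    skin = [[True, True], [False, True]]
    print(add_depth_noise(depth, sigma_mm=5.0, rng=rng))
    print(flip_skin_labels(skin, flip_prob=0.1, rng=rng))
```

Sweeping `sigma_mm` and `flip_prob` over a range of values and re-running the tracker at each setting yields error-versus-noise curves of the kind summarized in this evaluation.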

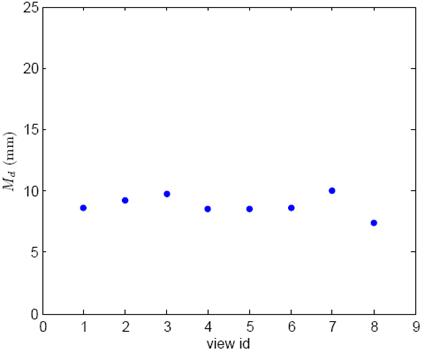

Quantitative evaluation of the performance of the method with respect to viewpoint variation.

A video with sample qualitative experiments.

Contributors

- Iason Oikonomidis, Nikolaos Kyriazis, Antonis Argyros.

- This work was partially supported by the IST-FP7-IP-215821 project GRASP and Robohow.cog.

Relevant publications

- I. Oikonomidis, N. Kyriazis and A.A. Argyros, “Tracking the articulated motion of two strongly interacting hands”, to appear in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR’2012, Rhode Island, USA, June 18-20, 2012.

- I. Oikonomidis, N. Kyriazis and A.A. Argyros, “Efficient model-based 3D tracking of hand articulations using Kinect”, in Proceedings of the 22nd British Machine Vision Conference, BMVC’2011, University of Dundee, UK, Aug. 29-Sep. 1, 2011.

- I. Oikonomidis, N. Kyriazis and A.A. Argyros, “Markerless and Efficient 26-DOF Hand Pose Recovery”, in Proceedings of the 10th Asian Conference on Computer Vision, ACCV’2010, Part III LNCS 6494, pp. 744–757, Queenstown, New Zealand, Nov. 8-12, 2010.

- I. Oikonomidis, N. Kyriazis and A.A. Argyros, “Full DOF tracking of a hand interacting with an object by modeling occlusions and physical constraints”, in Proceedings of the 13th IEEE International Conference on Computer Vision, ICCV’2011, Barcelona, Spain, Nov. 6-13, 2011.

- N. Kyriazis, I. Oikonomidis and A.A. Argyros, “A GPU-powered computational framework for efficient 3D model-based vision”, Technical Report TR420, ICS-FORTH, Jul. 2011.
